Right-Sizing Data Center Capital for Cloud Migration

The biggest problem with data centers today is poor capacity utilization (Shehabi et al. 2016). The most widely cited example is comatose servers: 20-30% of servers in typical enterprise data centers use electricity but do nothing useful (Koomey and Taylor 2015). Beyond comatose servers, many more servers are used less than 5% of the year (Kaplan et al. 2008). A related problem is that many servers are far too powerful for the jobs they do, with more memory, processors, and hard drives than they can possibly use productively (I call this “over-provisioning”).

Until recently, this poor capital utilization in the data center has been treated as an unavoidable cost of doing business (Koomey 2014). Most enterprise facilities (which I define as data centers run by companies whose primary business is not computing) suffer from these problems, largely because of institutional failures. For example, in enterprise data centers the information technology department usually buys the computers, but the facilities department buys the cooling and power distribution equipment and pays the electric bills. The business unit demanding the computers doesn’t care about the details; it just wants to roll out its newest project. These departments have separate budgets and separate bosses, and they rarely, if ever, optimize for the good of the company as a whole. In many cases, these companies can’t even tell you how many servers they have, let alone what their utilization is (Koomey 2016).

The big cloud computing providers have done a much better job than the enterprise data centers, with far fewer comatose servers, higher server utilization, and better matching of server capabilities to the loads they serve. These providers also track their equipment inventories much more carefully than do traditional enterprise facilities.

Data centers have become increasingly important to the US economy, and more and more companies are confronting the massive waste of capital inherent in traditional ways of organizing and operating them. A growing number are moving workloads to cloud computing providers instead of building or expanding “in-house” data center facilities (Shehabi et al. 2016). As more companies consider this option, it’s important to understand the steps needed to make such a move cost-effectively.

Because of their economies of scale and other advantages, cloud providers can generally deliver computing services at lower cost than enterprise facilities can. But simply mapping existing servers onto a cloud provider’s infrastructure will not necessarily lead to savings, because such a mapping carries the poor capacity utilization of existing facilities (comatose, underused, and over-provisioned servers) along with it.

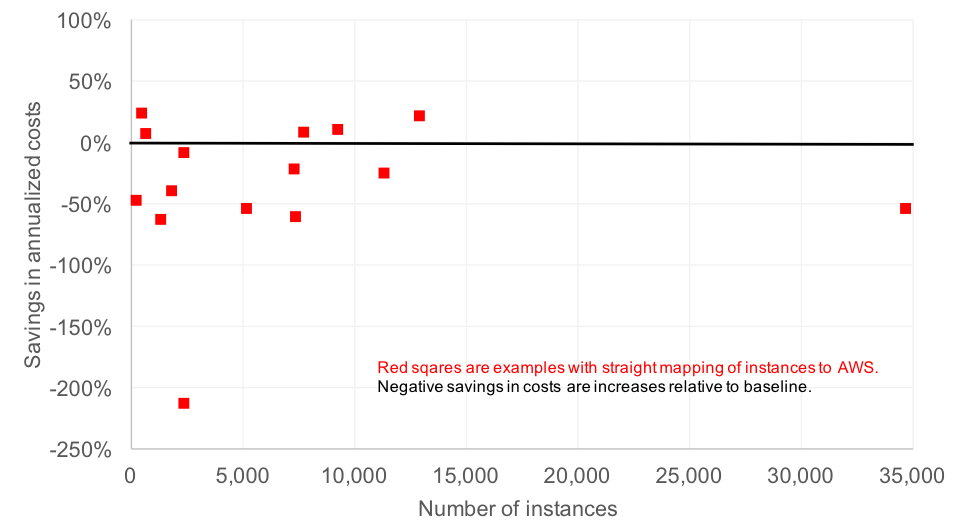

Consider Figure 1, which shows TSO Logic data for data center installations of varying sizes. Each installation moves from a base case (a traditional data center) to a cloud computing facility, with its server “instances” mapped on a one-for-one basis and nothing about them changed.

Server instances can be virtual or physical. In this case, they are virtual instances, with many instances running on a smaller number of physical machines. The data set contains more than 100,000 virtual server instances.

Each red square represents a single installation (in one case, a single square stands for five installations). The X-axis shows the number of instances in each installation, ranging from hundreds to almost 35,000. On the Y-axis, I’ve plotted the savings in annualized total data center costs when existing instances are mapped one-for-one onto the cloud, exactly replicating the utilization, amount of memory, and number of processors of each instance. These costs include cooling infrastructure capital, electricity, space, amortization, operating system licenses, and maintenance expenses.

Figure 1: Number of instances in each of 15 installations versus % savings to map them one-for-one into the cloud

The most important finding from this exercise is that simply mapping server instances to the cloud does not guarantee cost savings. In fact, only one third of the installations show cost savings under such a mapping; the other two thirds show an increase in costs (i.e., negative savings).

The outlier in Figure 1 is the installation with about 2,000 instances and negative savings (a cost increase) of more than 200% when its workloads are mapped one-for-one onto the cloud. This result stems from heavily over-provisioned instances combined with very low utilization for the vast majority of them.
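The savings metric on the Y-axis can be made concrete with a short Python sketch. It assumes savings are measured as the base-case annual cost minus the cloud annual cost, divided by the base-case cost, so negative values are cost increases. The function names and dollar figures are hypothetical. Under that definition, a savings figure of -200% means the cloud bill is three times the base-case cost.

```python
def percent_savings(base_cost: float, cloud_cost: float) -> float:
    """Savings from a move as a percentage of base-case annual cost.

    Positive values are savings; negative values are cost increases.
    """
    if base_cost <= 0:
        raise ValueError("base_cost must be positive")
    return 100.0 * (base_cost - cloud_cost) / base_cost


def cloud_cost_multiple(savings_pct: float) -> float:
    """Invert percent_savings: cloud cost expressed as a multiple of base cost."""
    return 1.0 - savings_pct / 100.0


# Hypothetical installation: $1.0M/yr in-house vs. $1.2M/yr mapped one-for-one.
print(percent_savings(1_000_000, 1_200_000))  # -20.0
# A savings figure of -200% means the cloud bill is three times the base case.
print(cloud_cost_multiple(-200.0))            # 3.0
```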

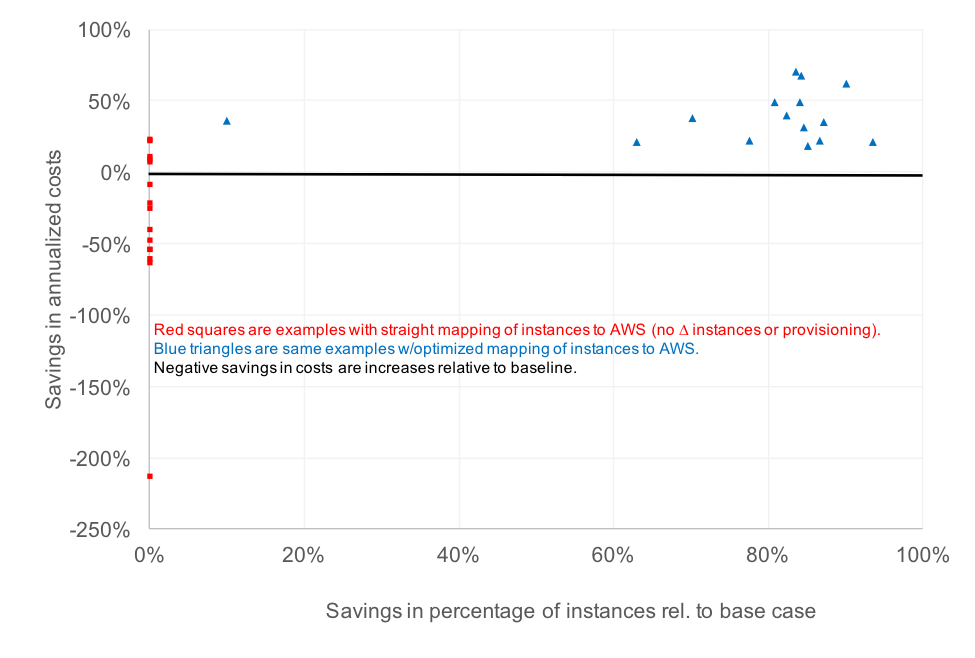

Now consider Figure 2, which shows some of the same data as Figure 1, plotted differently. On the X-axis, I’ve plotted the percentage reduction in the number of instances when moving from the base case to either the “one-to-one mapping” case or the optimized case. The one-to-one mapping doesn’t change the number of instances, so all of its red squares sit at 0% on the X-axis, with annual cost savings exactly matching those in Figure 1. The blue triangles show the optimized mapping onto cloud resources, which in almost every case eliminates a large fraction of the instances in the base case.

Figure 2: % annual cost savings to map 15 installations one-for-one into the cloud, compared to optimizing them and eliminating unused instances before transferring to the cloud

Interestingly, in the optimized case every one of the 15 installations shows significant cost savings compared to the base case. These savings come from eliminating comatose servers, consolidating low-utilization servers, and “right sizing” server instances to use the correct amounts of memory and processor power. In all but one installation, optimization eliminates 60 to 90% of server instances.
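The three optimization steps (eliminate, consolidate, right-size) can be illustrated with a minimal Python sketch. It is not TSO Logic’s method: the size catalog, the 25% headroom, the use of first-fit decreasing bin packing for consolidation, and the example fleet are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical catalog of (vCPUs, GiB) sizes, smallest first; not a real provider's list.
SIZES = [(1, 2), (2, 4), (2, 8), (4, 16), (8, 32), (16, 64)]
HEADROOM = 1.25  # assumed safety margin applied to observed peak demand


@dataclass
class Instance:
    name: str
    peak_cpu: float      # peak vCPUs actually used
    peak_mem: float      # peak GiB actually used
    useful: bool = True  # False marks a comatose instance


def right_size(cpu: float, mem: float) -> tuple[int, int]:
    """Return the smallest size covering the demand (largest size if none does)."""
    for vcpus, mem_gib in SIZES:
        if vcpus >= cpu and mem_gib >= mem:
            return vcpus, mem_gib
    return SIZES[-1]


def optimize(fleet: list[Instance]) -> list[tuple[int, int]]:
    # Step 1: eliminate comatose instances outright.
    live = [i for i in fleet if i.useful]
    # Step 2: consolidate with first-fit decreasing, packing each instance's
    # padded peak demand into targets no bigger than the largest size.
    # Summing peaks is conservative, since real peaks rarely coincide.
    max_cpu, max_mem = SIZES[-1]
    bins: list[list[float]] = []  # each entry: [cpu demand, memory demand]
    for inst in sorted(live, key=lambda i: i.peak_cpu, reverse=True):
        cpu, mem = inst.peak_cpu * HEADROOM, inst.peak_mem * HEADROOM
        for b in bins:
            if b[0] + cpu <= max_cpu and b[1] + mem <= max_mem:
                b[0] += cpu
                b[1] += mem
                break
        else:
            bins.append([cpu, mem])  # no existing target has room
    # Step 3: right-size each consolidated target to the smallest size that fits.
    return [right_size(cpu, mem) for cpu, mem in bins]


def percent_reduction(before: int, after: int) -> float:
    """Percentage of instances eliminated (the X-axis quantity in Figure 2)."""
    return 100.0 * (before - after) / before


# Hypothetical fleet: one busy database, seven lightly used VMs, three comatose VMs.
fleet = [Instance("db", 10.0, 40.0)]
fleet += [Instance(f"vm{n}", 0.4, 3.0) for n in range(7)]
fleet += [Instance(f"idle{n}", 0.0, 1.0, useful=False) for n in range(3)]

targets = optimize(fleet)
print(targets)                                                # [(16, 64), (4, 16)]
print(round(percent_reduction(len(fleet), len(targets)), 1))  # 81.8
```

Packing summed peaks is deliberately conservative; a real consolidation analysis would more likely examine utilization over time rather than single peak values.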

In the single installation where only about 10% of instances are eliminated (the blue triangle far to the left of all the others), the savings came mostly from “right sizing” the server instances rather than from reducing their number. For this installation, optimization turns a 9% cost increase in the “one-to-one mapping” case into about 36% savings.
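The swing in that installation can be checked with simple arithmetic. Indexing the base-case annual cost to 100, a 9% increase puts the one-for-one cloud cost at 109, and about 36% savings puts the optimized cost at roughly 64. That is a swing of about 45 points of base-case cost. The sketch below also computes the share of the one-for-one cloud bill that optimization removes; the figures are approximate because the text gives “about 36%”.

```python
BASE = 100.0        # base-case annual cost, indexed to 100
ONE_TO_ONE = 109.0  # 9% cost increase in the one-to-one mapping
OPTIMIZED = 64.0    # about 36% savings after optimization

# Swing in points of base-case cost.
swing = ONE_TO_ONE - OPTIMIZED
# Share of the one-to-one cloud bill removed by optimization.
cut_pct = 100.0 * swing / ONE_TO_ONE

print(swing)              # 45.0
print(round(cut_pct, 1))  # 41.3
```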

Note that the optimized case does not include re-architecting software to make better use of modern computing resources, which can take months to years. The optimized case therefore reflects only the low-hanging fruit that can be harvested quickly; modernizing software design can yield substantial additional benefits.

In addition, the shift to cloud can deliver more than cost savings. Modern tools for cloud workloads can speed time to market and increase the rate of innovation (Schuetz et al. 2013). These benefits can swamp the direct cost savings, but they are not tallied here.

Summary

Many organizations can benefit by shifting their computing workloads to the cloud, but a smart strategy is to optimize those workloads before making the switch. The data reviewed here show that such optimization can yield substantial benefits (saving more than one third of annual data center costs) and make it more likely that the shift to cloud will succeed.

Gartner estimates that businesses spend $170 billion per year globally on “data center systems”.[1] If the shift to cloud computing can reduce these costs by even 25%, that would mean savings of about $40 billion flowing directly to companies’ bottom lines every year, which is big money by any measure.
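The $40 billion figure is a rounded version of a one-line calculation. The sketch below reproduces it and shows how the estimate scales with the assumed reduction. The 10% and one-third cases are illustrative assumptions, not estimates from the data.

```python
GLOBAL_SPEND_B = 170.0  # Gartner estimate of annual "data center systems" spend, $ billions


def annual_savings_billion(reduction: float, spend: float = GLOBAL_SPEND_B) -> float:
    """Annual savings in $ billions for a fractional cost reduction."""
    return spend * reduction


for r in (0.10, 0.25, 1 / 3):
    print(f"{r:.0%} reduction -> ${annual_savings_billion(r):.1f}B per year")
# The 25% case gives $42.5B, i.e. "about $40 billion".
```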

__________________________________________________________________

This analysis of TSO Logic’s data was conducted by Jonathan Koomey on his personal time. TSO Logic contributed the data (under NDA) and helped explain the data and calculations, but did not contribute financially to the creation of this blog post.

[1] Gartner Forecast Alert: IT Spending, Worldwide, 3Q17 Update, 29 September 2017 [https://www.gartner.com/technology/research/it-spending-forecast/]

_____________________________________________________________________

References

Kaplan, James M., William Forrest, and Noah Kindler. 2008. Revolutionizing Data Center Efficiency. McKinsey and Company.

Koomey, Jonathan. 2016. “Applying the Scientific Method in Data Center Management.” In Data Center Knowledge. March 9. [http://www.datacenterknowledge.com/archives/2016/03/09/applying-scientific-method-data-center-management/]

Koomey, Jonathan. 2016. “Three Pillars of Modern Data Center Operations.” In Data Center Knowledge. February 2. [http://www.datacenterknowledge.com/archives/2016/02/02/three-pillars-modern-data-center-operations/]

Koomey, Jonathan, and Jon Taylor. 2015. New data supports finding that 30 percent of servers are ‘Comatose’, indicating that nearly a third of capital in enterprise data centers is wasted. Oakland, CA: Anthesis Group. [http://anthesisgroup.com/wp-content/uploads/2015/06/Case-Study_DataSupports30PercentComatoseEstimate-FINAL_06032015.pdf]

Koomey, Jonathan, and Patrick Flynn. 2014. “How to run data center operations like a well oiled machine.” In DCD Focus. September/October 2014. pp. 81. [http://goo.gl/7sJHZb]

Koomey, Jonathan. 2014. Bringing Enterprise Computing into the 21st Century: A Management and Sustainability Challenge. March 17. [http://www.corporateecoforum.com/bringing-enterprise-computing-21st-century-management-sustainability-challenge/]

Koomey, Jonathan. 2013. “Modeling the Modern Facility.” In Data Center Dynamics (DCD) Focus. November/December. pp. 102. [http://www.datacenterdynamics.com/focus/archive/2013/11/modeling-modern-facility]

Masanet, Eric, Arman Shehabi, and Jonathan Koomey. 2013. “Characteristics of Low-Carbon Data Centers.” Nature Climate Change. vol. 3, no. 7. July. pp. 627-630. [http://dx.doi.org/10.1038/nclimate1786 and http://www.nature.com/nclimate/journal/v3/n7/abs/nclimate1786.html#supplementary-information]

Masanet, Eric R., Richard E. Brown, Arman Shehabi, Jonathan G. Koomey, and Bruce Nordman. 2011. “Estimating the Energy Use and Efficiency Potential of U.S. Data Centers.” Proceedings of the IEEE. vol. 99, no. 8. August.

Schuetz, Nicole, Anna Kovaleva, and Jonathan Koomey. 2013. eBay: A Case Study of Organizational Change Underlying Technical Infrastructure Optimization. Stanford, CA: Steyer-Taylor Center for Energy Policy and Finance, Stanford University. September 26. [http://www.mediafire.com/view/8ema554a2ho9ifj/Stanford_eBay_Case_Study-_FINAL-130926.pdf]

Shehabi, Arman, Sarah Josephine Smith, Dale A. Sartor, Richard E. Brown, Magnus Herrlin, Jonathan G. Koomey, Eric R. Masanet, Nathaniel Horner, Inês Lima Azevedo, and William Lintner. 2016. United States Data Center Energy Usage Report. Berkeley, CA: Lawrence Berkeley National Laboratory. LBNL-1005775. June. [https://eta.lbl.gov/publications/united-states-data-center-energy]